Ahead of an election year that is just getting underway, Meta said it will label content produced by AI.

Labeling can help flag false material, but it may also push creators of AI content to change their tactics to avoid detection.

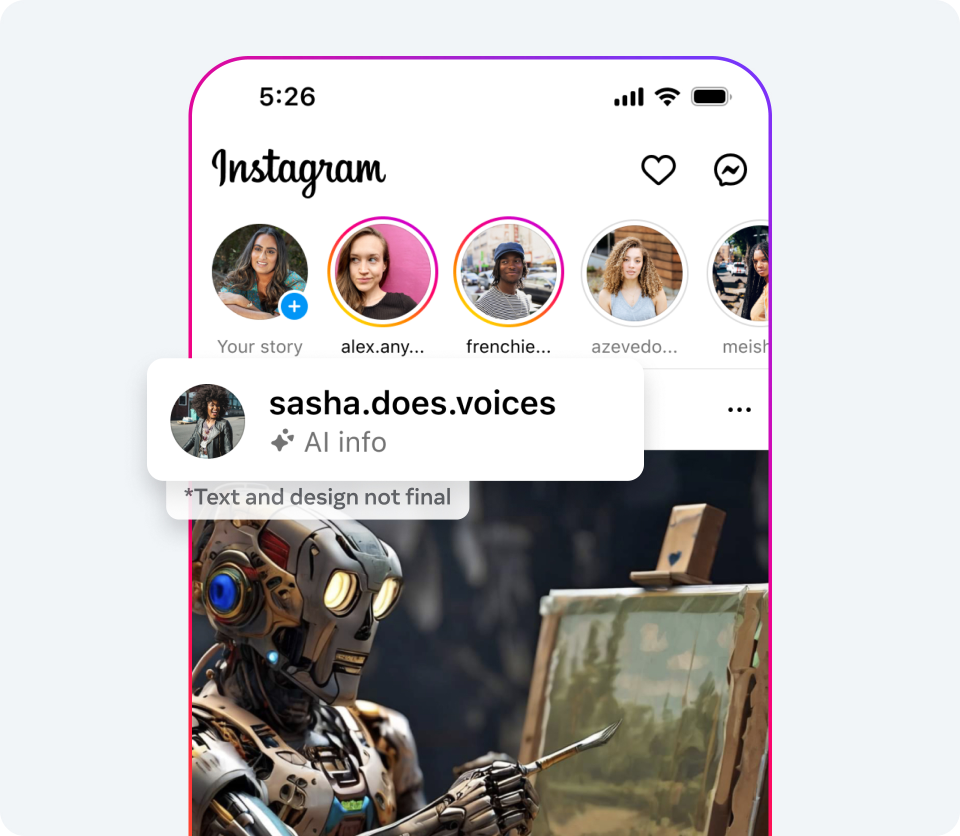

Meta has been collaborating with industry partners on common technical standards that identify AI-generated material and flag it when it appears on Facebook, Instagram, and Threads.

The labels will be released ahead of several upcoming elections, though the company has not disclosed an exact launch date.

“We’re developing this feature right now, and we’ll start applying labels in all languages that each app supports in the upcoming months,” a statement from Meta read. “We intend to stick with this strategy over the course of the upcoming year, which will see several significant elections held across the globe.”

To improve its AI detection capabilities, Meta intends to learn more about how AI content is produced and distributed.

Meta is not the only tech giant attempting to label AI material. Google said in November of last year that it would roll out labels on YouTube and its other platforms. That announcement followed President Biden’s October executive order, which mandates safeguards against malicious AI, including the use of watermarks to identify AI-generated content. Numerous companies have already committed to putting safeguards in place and creating guidelines for safe use.

Meta already labels content created with its Meta AI tool. These labels include watermarks, which can be visible or invisible, and markers stored in the image’s metadata. However, AI-generated audio and video are not yet covered by this detection system.

Instead, Meta relies on creators to disclose when they use AI to produce a video so that the platform can label it. Creators who don’t comply risk penalties or having their accounts disabled. The newly developed tools, by contrast, are more comprehensive. Meta is building technology to detect the invisible metadata markers “at scale,” so that it can label material produced with tools from Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock.

While detection methods and new tools help weed out false material and tell users when content is fake, bad actors might build tools of their own to get around these safeguards. The music and film industries faced a similar problem: cracking down on unauthorized downloading only led new outlets to spring up. Social media companies and their users face an uphill battle, as the new labels may give rise to a wave of deceptive tactics designed to fool AI detection systems.
