Meta said on Tuesday that it is testing several new generative AI tools, including one that can turn still images into full video ads.

In a separate test, Meta's AI changes a video's aspect ratio by automatically generating the missing pixels in every frame. That way, marketers can easily repurpose a video made for Facebook for Instagram, and vice versa. Meta already offers a similar expansion tool for static images.

It’s no surprise that Meta is investing heavily in video and AI. As of Q1, video accounted for 60% of the time people spent on Instagram and Facebook, including short-form Reels, longer-form videos, and live video.

Meta says that marketers get a 22% better return on investment (ROI) when they use AI features in Advantage+, Meta’s AI-powered ad platform for automated shopping campaigns.

Lessening the load

But many marketers, especially small businesses, don’t have the time, money, or editing skills to feed the video monster.

Video use on Meta’s platforms is growing, and the company expects it to keep growing quickly, said Tak Yan, VP of product management for monetization AI, at a press event in New York City on Tuesday during Advertising Week.

But “brands are telling us that it is much harder and takes a lot more resources to make video creative,” he said.

Meta has been spending billions on AI, in part to make creating ad assets easier.

More than a million marketers used at least one of Meta’s generative AI ad tools in September to make 15 million ads.

As more marketers and agencies adopt Meta’s generative AI ad tools, they share feedback that Meta uses to build new features and fix bugs.

Yan said that one important piece of feedback is that marketers “are keen” on generative AI tools that better reflect their brand’s voice and tone.

It doesn’t matter how easy it is to make a video or image if it doesn’t match the brand’s style.

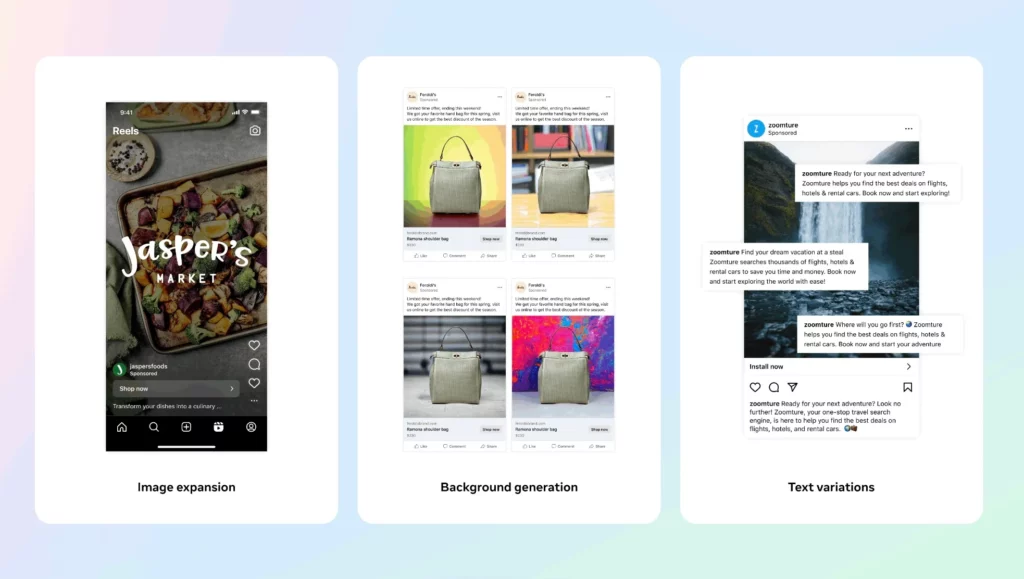

Earlier this year, Meta released a tool that lets advertisers generate variations of ad text and images. Advertisers can create these variations from text prompts, or have the tool generate different image backgrounds based on the text they enter. Meta has also begun testing logo uploads so that its model can generate images that are more brand-specific.

“This is feedback we hear all the time,” Yan said. “That’s why this is so important to us, and we work on it all the time.”

Creator partnerships

Meta is also focused on helping creators, with AI-powered ad tools, of course.

Another speaker at Tuesday’s press event was Nicola Mendelsohn, head of Meta’s global business group. She said, “I think we’re entering a new era of creator and customer engagement, one that is powered by the very powerful combination of AI and video.”

Meta is testing a few new features that will make it easier for brands and creators to work together. One is the ability to add a creator testimonial to partnership ads, which let advertisers extend the reach of their content by running it through a creator’s handle.

Advertisers can already use creator content in their Reels collection ads, alongside their brand’s handle.

Both formats are powered by Advantage+ catalog, the new name for what used to be called “dynamic ads.”

Mendelsohn said that using AI to help creators and brands work together will give marketers more room to customize and vary their ad creative.

It will also help make creators part of an always-on strategy, she said. “People are interacting with creators more and more; they want to hear from creators.”

Meta has already begun gradually rolling out its new video-focused AI features through the Advantage+ creative option in Ads Manager, with broader availability expected by early next year.